We recently introduced qualitative comparisons and gave some essential tips on how to do them right. But we’ve seen that some laboratories prefer to mix things up, typically basing their procedures on arguments that seem pretty reasonable at first glance. Their conventions feel practical and logical, but on closer inspection, they have no justification for being part of validations or verifications.

To make it easier for you to resist the outdated and unjustified workflows and instead do your verifications wisely, we decided to write this guide. We’ll cover how to handle discrepancies in qualitative comparison studies and explain why it’s essential to do it right.

How to take a view on discrepancies?

As discussed in our blog post about confidence intervals, there is always some uncertainty in a measurement. In qualitative comparison studies, this catches the eye particularly well, as some samples are interpreted as positive in one measurement and as negative in another measurement. These results are reported as false positive/negative. In most cases, it’s enough to just report the results as they are. But sometimes, it may feel worthwhile to take a closer look at the disagreeing results and try to find what caused them. What if our new method is simply better than our comparative method? What should we consider if we try to resolve the discrepancies?

Before planning our approach to handling discrepancies, we should step back for a moment and ask ourselves a key question: what is the purpose of what we are doing? We should carefully consider the following things:

- What is the information we are trying to obtain with our comparison study?
- How can we build the study setup that gives that information?
- How to report the results so that the report gives a correct impression of our method?
- What conclusions can we actually make from the data?

The relevant terms and equations are introduced in our previous blog post about qualitative comparisons.

When you want to investigate how your results will change as the measurement procedure changes

If we are, for example, comparing the performance of a new method to an old one, examining discrepancies and changing results of individual samples based on these checks will basically just distort the results. As you are reporting agreement between the methods, if you change part of the results based on reruns, your results will no longer describe the agreement between the original methods. You might have nice numbers on your report, but you cannot know what these numbers tell you, as part of the samples are telling a different story than the others.

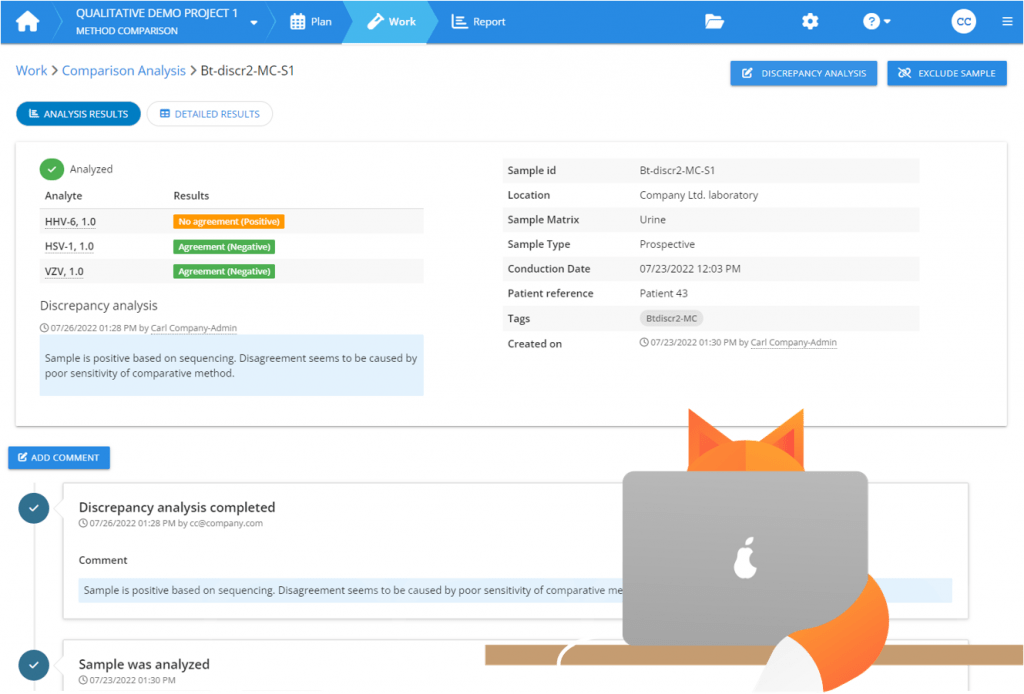
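To see how editing individual results shifts the reported numbers, here is a minimal sketch. It assumes the usual 2x2 agreement measures (positive percent agreement, negative percent agreement, overall agreement); the function name `agreement` and all counts are hypothetical and invented purely for illustration.

```python
def agreement(pos_pos, pos_neg, neg_pos, neg_neg):
    """Agreement between candidate and comparative results from 2x2 counts.

    Argument names read candidate first, comparative second,
    e.g. pos_neg = candidate positive, comparative negative.
    """
    total = pos_pos + pos_neg + neg_pos + neg_neg
    return {
        "ppa": pos_pos / (pos_pos + neg_pos),   # positive percent agreement
        "npa": neg_neg / (neg_neg + pos_neg),   # negative percent agreement
        "overall": (pos_pos + neg_neg) / total,
    }

# Hypothetical study as measured.
original = agreement(pos_pos=45, pos_neg=6, neg_pos=5, neg_neg=144)

# Same study after four candidate-positive / comparative-negative samples
# were rerun and relabelled as agreeing positives.
edited = agreement(pos_pos=49, pos_neg=2, neg_pos=5, neg_neg=144)

for name in ("ppa", "npa", "overall"):
    print(name, round(original[name], 3), "->", round(edited[name], 3))
```

Every figure improves in the edited table, yet it no longer describes the agreement between the two methods as they were actually run: four samples now reflect a different measurement procedure than the other 196.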

Yet, it is possible to examine part of the results in more detail. You can add the results of these checks as explanatory information to your report, giving more understanding about the differences between the performance of the two methods. So instead of trying to “fix” the discrepancies, you can describe the additional checks that you performed on a sample and say that, based on this investigation, the reason for the disagreement seems to be, e.g., that the old method was not able to identify this positive sample as positive. In Validation Manager, you can easily add this information to your report while examining the results of an individual sample.

When you want to investigate the performance of your candidate method

How about when we are using the Diagnostic Accuracy Criteria (i.e., the true results of the samples) as a reference? If the term Diagnostic Accuracy Criteria is new to you, we recommend reading our blog post about qualitative comparisons.

If we want to gain knowledge about the sensitivity and specificity of the candidate method, we need to be sure of all the samples within the study, whether they are negative or positive. If part of the samples makes us doubt the correctness of our reference values, it’s not enough to just measure a subset of the samples more thoroughly. And if we add more information to the study to change the result interpretations, we need to add that information for all of the samples to make sure that each and every one of them has all relevant information to form a correct result interpretation.

Again, it is possible to examine part of the results in more detail to add explanatory information to the report. For example, you might confirm that false-negative results are related to very low concentrations and evaluate the clinical relevance of not recognizing these samples as positive. In Validation Manager Diagnostic accuracy study, you can examine all results related to a sample in one view and add comments to be shown on your report. Because the automatic interpretations in a diagnostic accuracy study do not necessarily give the correct Diagnostic Accuracy Criteria for every sample, Validation Manager enables you to change the result interpretation of individual samples as you comment on them, but you should be careful when using this option. It is essential that you base your decisions on the facts that the gathered data gives you.

How to do the extra checks?

As already mentioned, you shouldn’t change your result interpretations based on any extra checks. But it may actually be a good idea to use some kind of checks to gain an understanding of why your results are what they are and what kind of consequences it may have on your laboratory’s ability to give correct information for doctors who are treating their patients. The main points that you should understand to avoid messing up your results are:

- In method comparisons, you cannot know which ones of the discrepant results are caused by false results of the candidate method and which ones by false results of the comparative method. The same applies to instrument comparisons.
- In method comparisons, you cannot know how many of the true positive and true negative results are related to samples that actually gave a false result with both methods. The same applies to instrument comparisons.
- When you compare your candidate method to a Diagnostic Accuracy Criteria, you should be able to trust that your Diagnostic Accuracy Criteria gives you enough information to make a correct conclusion of all the samples, whether they are positive or negative. Otherwise, it’s not a Diagnostic Accuracy Criteria. Even if you know that your Diagnostic Accuracy Criteria is not perfect, it is still the best information available for deducing the result. If you come up with a way to complement your results to get a more accurate view of the sample, you need to use it for all the samples, and this way, make it a part of your Diagnostic Accuracy Criteria.
- Don’t let the result of your candidate method affect which method you are using as the comparative method, whether you accept the result of your comparative method or not, or how you interpret the results of which your Diagnostic Accuracy Criteria consists. Because if you do, you are effectively verifying your candidate method against itself.

What we mean by these statements is described in more detail below.

Don’t rerun like you are fighting the odds

Since no method is perfectly accurate, we cannot know whether the disagreement is caused by a false result given by the candidate method or by a false result given by the comparative method. If we have prior knowledge about the performance of both methods, we may be able to make educated guesses about which one of them would more likely give correct results, but still, we cannot be sure.

In addition, no reruns or checks give perfectly correct results. They always contain some uncertainties that may cause false-positive or false-negative results. If we measure the sample again with the same method and get a different result from what we got before, we cannot know whether it was the first or the second measurement that gave the correct result.

That’s why, when reruns are used to make corrections to the reported results, it easily turns into exploiting statistics to get better-looking results. If we assume a 5% chance that a single measurement gives a false result, and that the measurements are independent, there is already a 14.3% chance that at least one of three measurements gives a false result. If you repeat a cycle of approving the results that please you and rerunning the samples whose results you don’t like, you are basically flipping a coin until you get the result you wished for. This is not something that you should do when validating or verifying a method.
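The 14.3% figure follows from the complement rule, 1 - (1 - p)^n. A minimal sketch, assuming independent measurements that each have the same chance `p_false` of giving a false result (the function name `p_any_false` is ours, not from any library):

```python
def p_any_false(p_false: float, n_runs: int) -> float:
    """Probability that at least one of n_runs measurements is false.

    Assumes every run has the same false-result probability and that
    the runs are independent of each other.
    """
    return 1.0 - (1.0 - p_false) ** n_runs

# How quickly the chance of seeing at least one false result grows.
for n in (1, 2, 3, 5, 10):
    print(f"{n} run(s): {p_any_false(0.05, n):.1%}")
```

The more often a sample is rerun, the more likely it is that some run contradicts the others purely by chance, which is why picking the preferred result among reruns does not make it more trustworthy.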

In some cases, it may be practical to measure multiple replicates and select the interpretation achieved in most of the measurements. Replicates improve results by making the effect of random error (basically imprecision related to samples near cutoff) smaller, but they do not fix flaws in sensitivity or specificity. If you decide to use replicate measurements, do it for all of the samples within your study.
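As a rough sketch of the replicate approach, the snippet below applies the same majority rule to every sample. It assumes only two possible interpretations and an odd number of replicates per sample, so ties cannot occur; the sample IDs, labels, and the `majority_call` helper are hypothetical.

```python
from collections import Counter

def majority_call(replicates):
    """Return the interpretation obtained in most of the replicates.

    Requires an odd number of replicates; with two possible
    interpretations this guarantees a clear majority.
    """
    if len(replicates) % 2 == 0:
        raise ValueError("use an odd number of replicates")
    return Counter(replicates).most_common(1)[0][0]

# Every sample in the study gets the same number of replicates,
# not only the ones whose first result looked suspicious.
runs = {
    "S1": ["pos", "pos", "neg"],
    "S2": ["neg", "neg", "neg"],
    "S3": ["neg", "pos", "pos"],
}
calls = {sample: majority_call(reps) for sample, reps in runs.items()}
print(calls)
```

The important design choice is that the rule is fixed before the results are seen and is applied uniformly, so the reported numbers still describe one well-defined measurement procedure.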

To evaluate what caused the disagreement between the methods, it’s best to use a Diagnostic Accuracy Criteria to find out whether the samples really are positive or negative. Still, it is not recommended to change result interpretations based on these checks related to individual samples. In some cases, these checks can give valuable information to explain the differences between the compared methods, but it’s best used as explanatory information related to a sample. As mentioned above, changing the result interpretations of single samples changes the meaning of the values shown in your report.

Remember, concordant results can also be wrong

If both the candidate method and comparative method work well, it feels pretty unlikely that they would both give a false result for the same sample. Yet, even improbable things sometimes happen. Actually, the more data we collect, the more likely it becomes that something improbable happens at some stage of the study. And if, for example, the candidate method happens to have notably poor sensitivity when measuring samples from our local population, the probability of both methods giving negative results for positive samples may not be much smaller than the probability of our comparative method giving negative results for positive samples.

That’s why when measuring some results again, we should also consider measuring a good set of samples that gave concordant results. That way, we will get a more correct impression of the new method’s performance than just by finding reasons for disagreements.

Just like it is not recommended to change the interpretations of disagreeing samples based on additional measurements, it’s not recommended to change them in case you are doing checks for concordant results. The information you are getting from these checks may give valuable information to describe your new method, but it’s best to use it as explanatory information. But if for some reason, you think that it would be justified to change the result interpretations based on your additional checks, you definitely cannot select the samples for these checks based on whether they have disagreeing results or not. If you changed only discrepant results, you would be verifying your candidate method against itself.
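The effect of changing only discrepant results can be estimated with a small calculation. This is a sketch under stated assumptions: the errors of the two methods are independent given the true status of the sample, discrepant samples are resolved by a hypothetical perfect check, and concordant samples keep the comparative result as their reference. The function name and all performance figures are invented for illustration.

```python
def apparent_sensitivity(prev, se_cand, sp_cand, se_comp, sp_comp):
    """Expected candidate sensitivity when only discrepants are resolved.

    prev is the proportion of truly positive samples; se_* and sp_* are
    the true sensitivity and specificity of the candidate and the
    comparative method.
    """
    # Concordant positives: true positives found by both methods plus
    # true negatives that both methods wrongly called positive.
    both_pos = prev * se_cand * se_comp + (1 - prev) * (1 - sp_cand) * (1 - sp_comp)
    # Discrepant samples that the perfect check confirms as positive.
    cand_only = prev * se_cand * (1 - se_comp)
    comp_only = prev * (1 - se_cand) * se_comp
    found_by_candidate = both_pos + cand_only
    reference_positive = found_by_candidate + comp_only
    # Positives missed by BOTH methods never enter the reference
    # positives, because concordant negatives are not rechecked.
    return found_by_candidate / reference_positive

true_se = 0.80
apparent = apparent_sensitivity(prev=0.2, se_cand=true_se, sp_cand=0.98,
                                se_comp=0.80, sp_comp=0.98)
print(f"true sensitivity {true_se:.3f}, apparent {apparent:.3f}")
```

Under these assumptions the apparent sensitivity comes out higher than the true one, because the positive samples that both methods missed stay hidden among the concordant negatives while every resolved discrepancy in the candidate's favor is counted.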

Conclusion

We’ve described the principles of how to view disagreeing results in a comparison study. We’ve explained why you shouldn’t change your result interpretations based on any additional checks but only use those checks to gain understanding about the reasons behind the calculated results. We’ve also discussed things that you need to understand when planning how to handle discrepancies. We hope that this helps you do your verifications so that you understand what your report can tell you and how you can get more out of your comparisons.

Whatever you do, don’t use discrepancy resolution as a means for making your results look pretty. Be precise on what question your study setup is answering and what information you get from your checks and reruns.

If you are still unsure how to do your qualitative comparisons, or if you want more authoritative views on the subject of discrepancy resolution, we recommend reading chapter 6 point 3 in the FDA article Statistical Guidance on Reporting Results from Studies Evaluating Diagnostic Tests.
